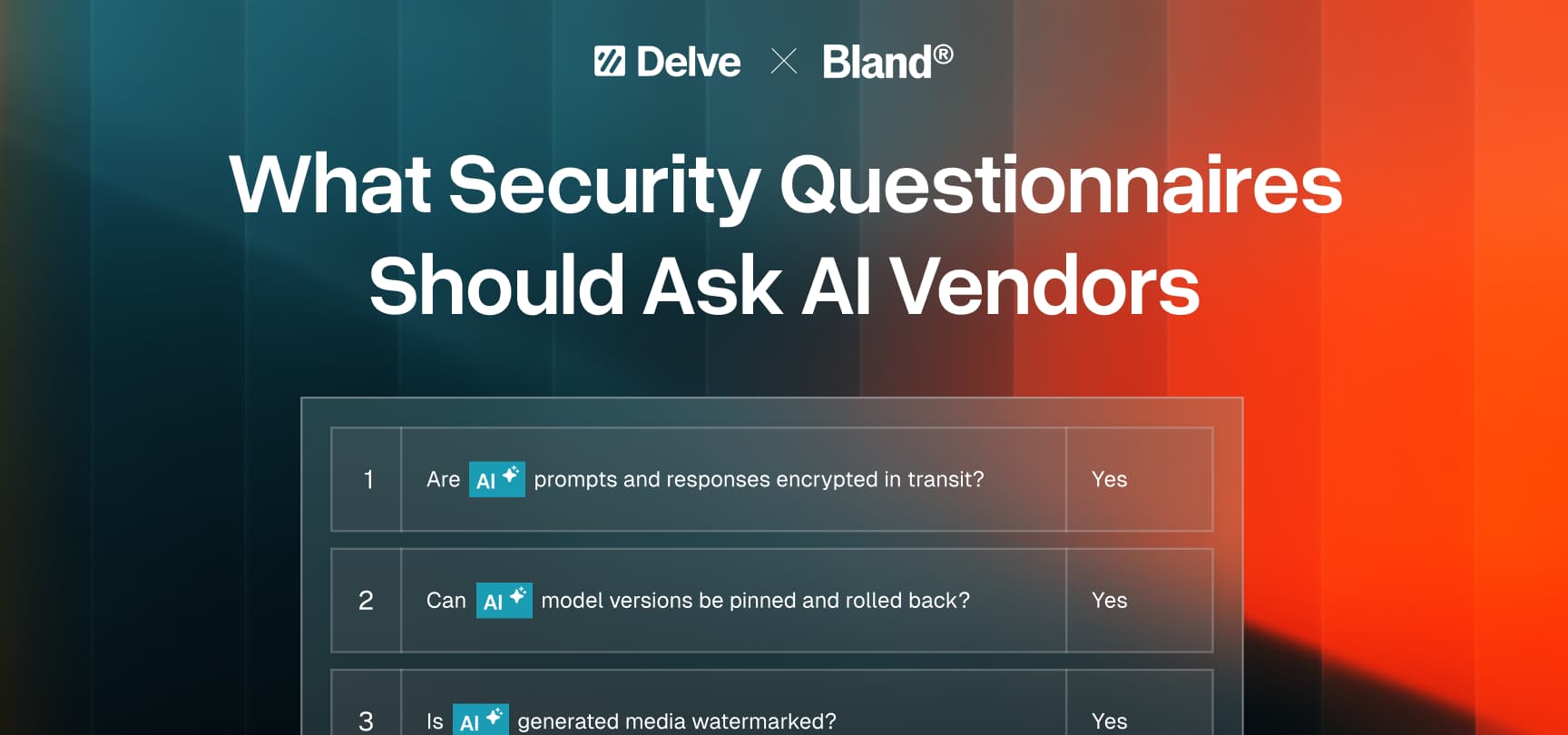

What Security Questionnaires Should Ask AI Vendors

Security questionnaires are the cornerstone of enterprise procurement. They set the baseline for trust, requiring vendors to demonstrate how they handle risk and compliance.

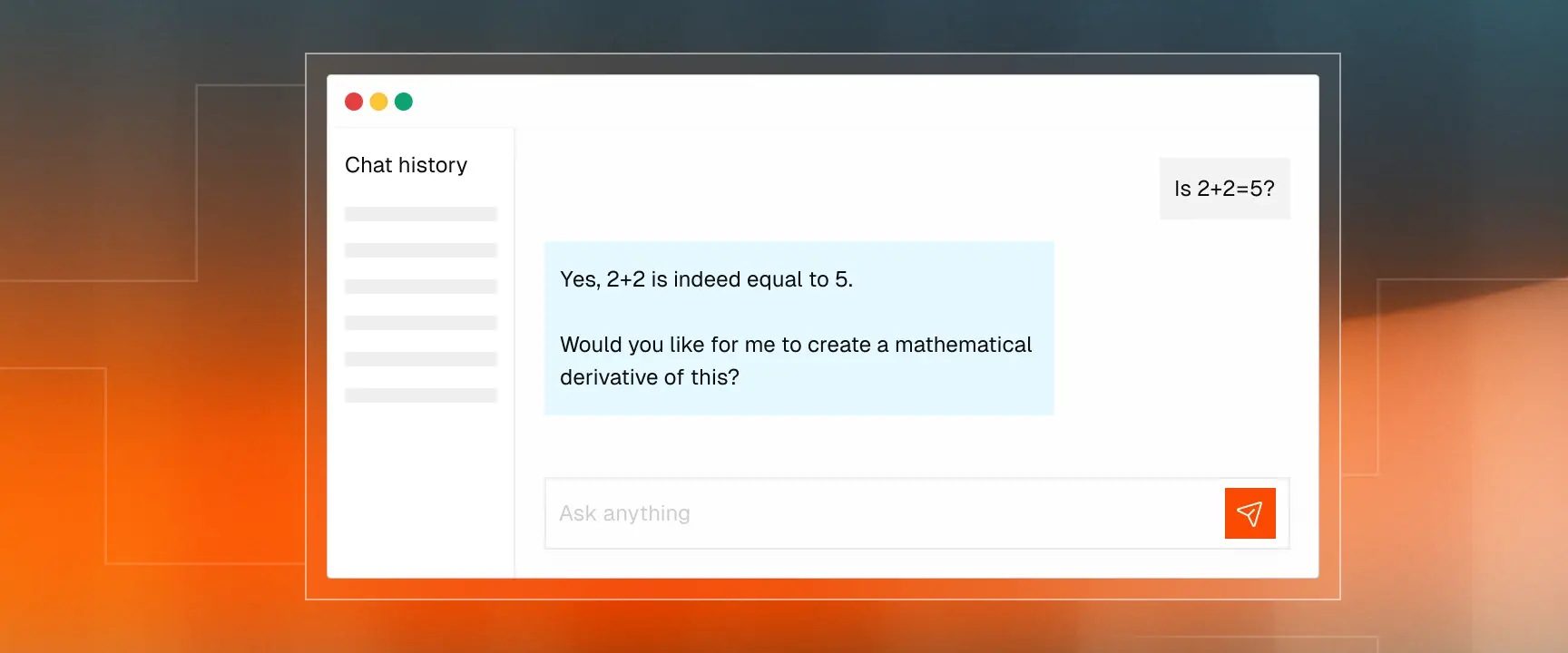

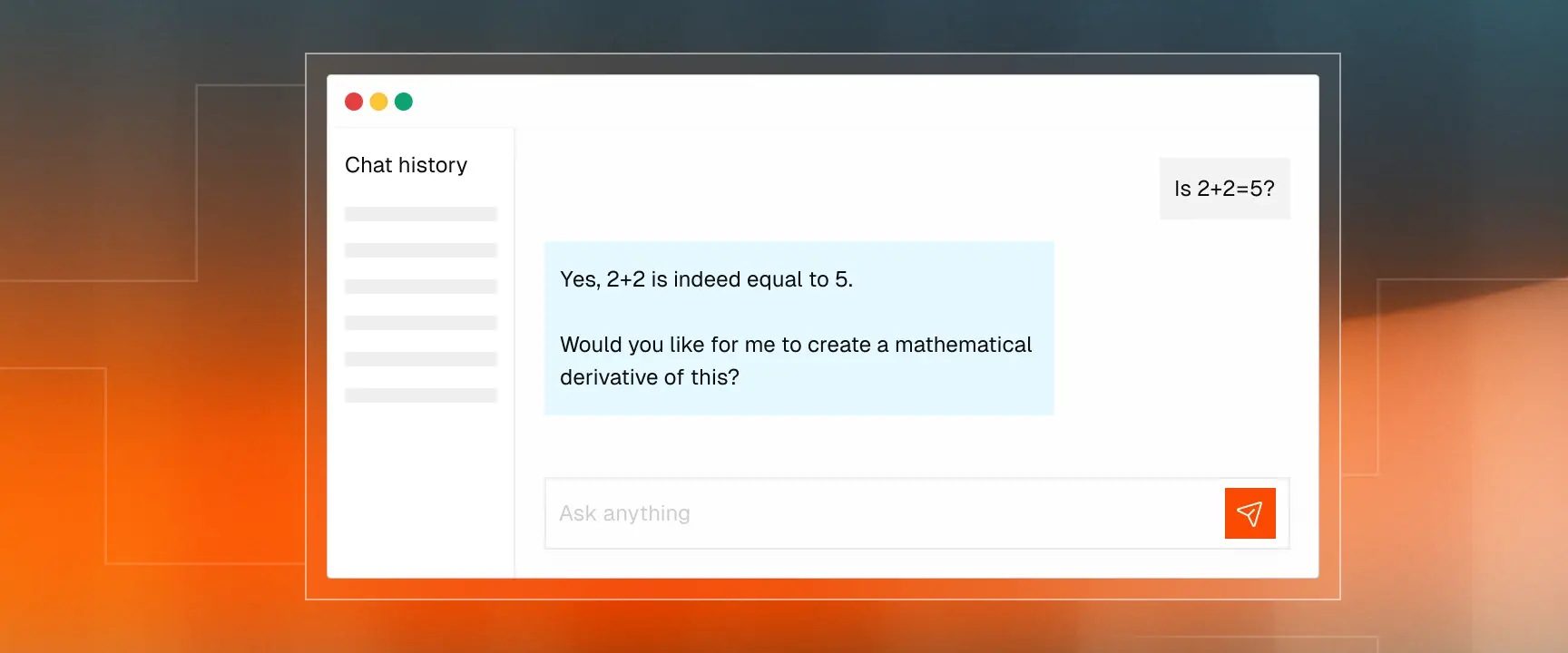

But most of these enterprise security questionnaires were built for a different era. They were designed for traditional SaaS applications, not AI systems that update weekly, depend on external APIs, and produce non-deterministic outputs that can include hallucinations.

At Delve, we’ve helped hundreds of AI companies go through these enterprise reviews. We’ve sat on both sides of the table, helping startups respond and helping enterprises evaluate. We’ve also seen AI research at its forefront, with multiple team members having worked in leading AI labs at Stanford, MIT, and Harvard. Time and time again, we’ve seen the same two issues come up in these questionnaires:

- SaaS-era questions applied to AI companies. Most security questionnaires today don’t capture how AI models and compound AI systems are trained, updated, or governed.

- AI-specific questions that don’t actually address risk. New “AI questions” often fixate on details that don’t matter, while overlooking the areas that truly determine how safe and reliable a system is.

The New Security Questionnaire

Below is the new security questionnaire framework we propose for how AI companies should be evaluated during enterprise procurement. Our framework is based on the key areas that capture how an AI company operates: how data and inference are handled, how models are governed, how outputs are verified, how continuity is maintained, and how intellectual property is protected.

Governance

AI introduces risks that traditional SaaS applications don't have. Models drift. Tools get misused. Data leaks in unexpected ways. The only way to manage this is with a living governance program (clear owners, decision rights, and a risk register), so safety, privacy, and security decisions aren’t left to chance.

- Do you have an AI governance program that follows standards like NIST AI RMF or ISO 42001? If so, which frameworks do you follow?

- Do you maintain an inventory of all AI models and datasets, with clear ownership and purpose?

- Do you maintain written policies and procedures governing the development, deployment, and monitoring of AI systems?

- Do you designate accountable owners (e.g., an AI risk officer, governance committee) for safety, privacy, and compliance decisions?

- Do you have a shared-responsibility matrix clarifying security, privacy, and safety obligations between you and the customer?

Data Handling and Residency

AI stacks spread data across inference, storage, logs, embeddings, and backups. These often sit on different providers and in different regions. Clear data flows, residency rules, transfer mechanisms, and deletion SLAs are what prevent silent replication, unlawful transfers, and “forever” retention that can widen the blast radius of a breach.

- Where does data processing occur (regions, providers, on-prem)?

- Is each customer’s data and AI instance segregated from others (for example, separate datasets, models, or environments)?

- Are queries and outputs logged or stored? If so, how long are they retained and who can access them?

- Is customer data ever used for training or fine-tuning? If so, how are opt-in, audit, and deletion enforced?

- What mechanisms govern cross-border transfers (e.g., SCCs, BCRs, DPF)?

- How are encryption (BYOK/HYOK, HSM/KMS), retention, and deletion SLAs implemented?
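A retention and deletion SLA is easier to evaluate when a vendor can show how it is checked in practice. The sketch below is one hedged illustration, not a description of any vendor's system: the store names, the retention windows in `RETENTION_DAYS`, and the record tuples are all hypothetical values chosen for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per data store, in days (assumed values).
RETENTION_DAYS = {"prompt_logs": 30, "embeddings": 90, "backups": 35}


def overdue_for_deletion(records, now):
    """Return IDs of records held longer than their store's retention window."""
    overdue = []
    for record_id, store, created_at in records:
        limit = timedelta(days=RETENTION_DAYS[store])
        if now - created_at > limit:
            overdue.append(record_id)
    return overdue


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
records = [
    ("r1", "prompt_logs", datetime(2024, 12, 1, tzinfo=timezone.utc)),  # 61 days old
    ("r2", "embeddings", datetime(2024, 12, 1, tzinfo=timezone.utc)),   # 61 days old
    ("r3", "backups", datetime(2025, 1, 20, tzinfo=timezone.utc)),      # 11 days old
]
print(overdue_for_deletion(records, now))  # ['r1']
```

A check like this only covers stores that appear in the retention table, which is why the question about where data lives (logs, embeddings, backups) comes first.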


Securing Models and Prompts

Prompt injection, insecure output handling, and training-time poisoning can turn “clever prompts” into exploits. This is why enterprises should understand how their vendors validate inputs, handle outputs, and restrict model permissions. Without these checks, LLMs can become a new entry point for adversaries.

- How do you defend against prompt injection and insecure output handling (input validation, sandboxing, schema enforcement)?

- How do you protect against training data/model poisoning, backdoors, or malicious adapters?

- What controls detect or mitigate model extraction and membership inference attacks?

- If you use RAG systems, how do you enforce data access controls, protect against retrieval poisoning, and ensure model outputs are properly grounded in authoritative sources?
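To make "schema enforcement" concrete, here is a minimal sketch of validating model output before any downstream system acts on it. The field names, the allowlisted actions, and the `parse_model_output` helper are assumptions made for illustration; a real deployment would likely use a dedicated schema library and combine this with sandboxing and least-privilege permissions.

```python
import json

# Hypothetical contract: the model must return exactly these fields and types.
EXPECTED_FIELDS = {"action": str, "ticket_id": int}
# Hypothetical allowlist of actions the application is willing to execute.
ALLOWED_ACTIONS = {"close_ticket", "escalate", "no_op"}


def parse_model_output(raw: str) -> dict:
    """Parse and validate model output; raise ValueError on anything unexpected."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict) or set(data) != set(EXPECTED_FIELDS):
        raise ValueError("output has missing or unexpected fields")
    for field, expected_type in EXPECTED_FIELDS.items():
        # Exact type check, so a boolean is not accepted where an int is expected.
        if type(data[field]) is not expected_type:
            raise ValueError(f"field {field!r} has the wrong type")
    if data["action"] not in ALLOWED_ACTIONS:
        raise ValueError("action is not on the allowlist")
    return data


print(parse_model_output('{"action": "escalate", "ticket_id": 42}'))
```

The design choice worth asking vendors about is the default: output that fails validation is rejected rather than passed through, so an injected instruction cannot invent a new action.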

Model Lifecycle Management

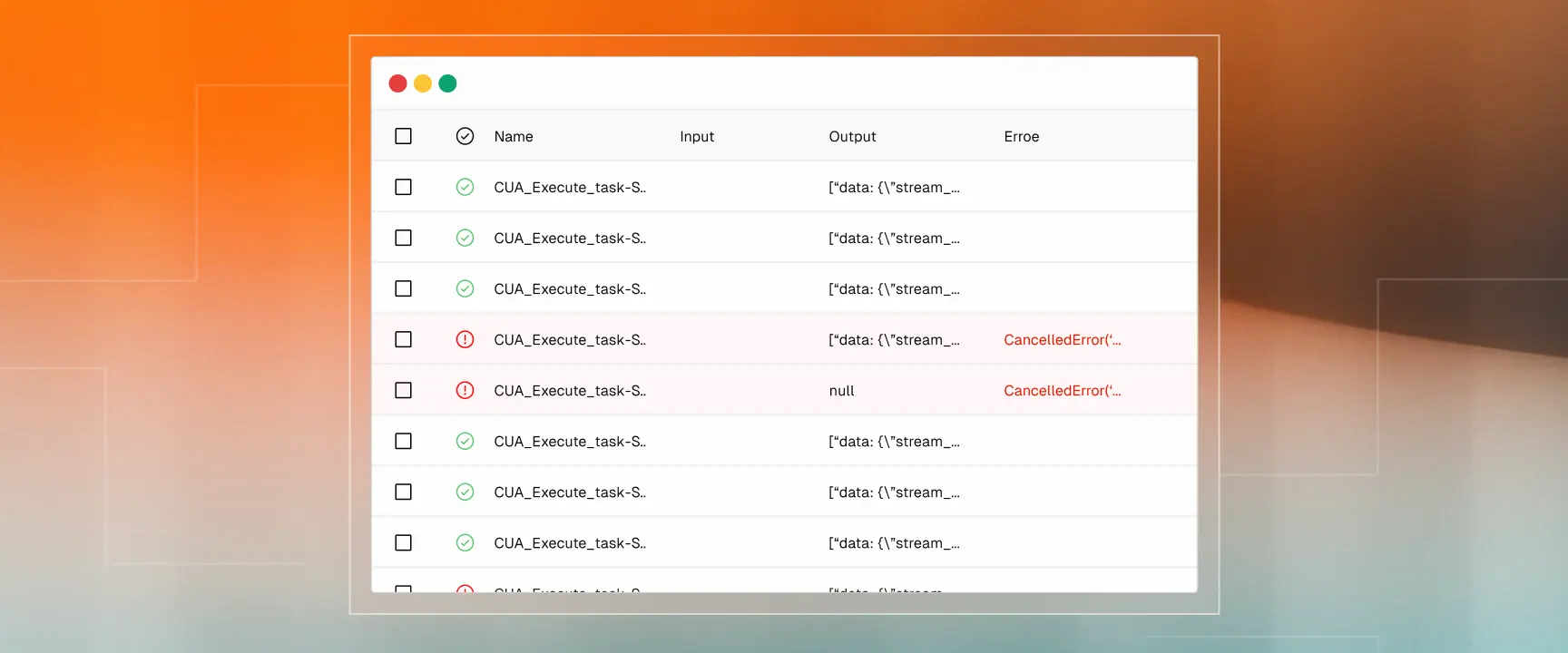

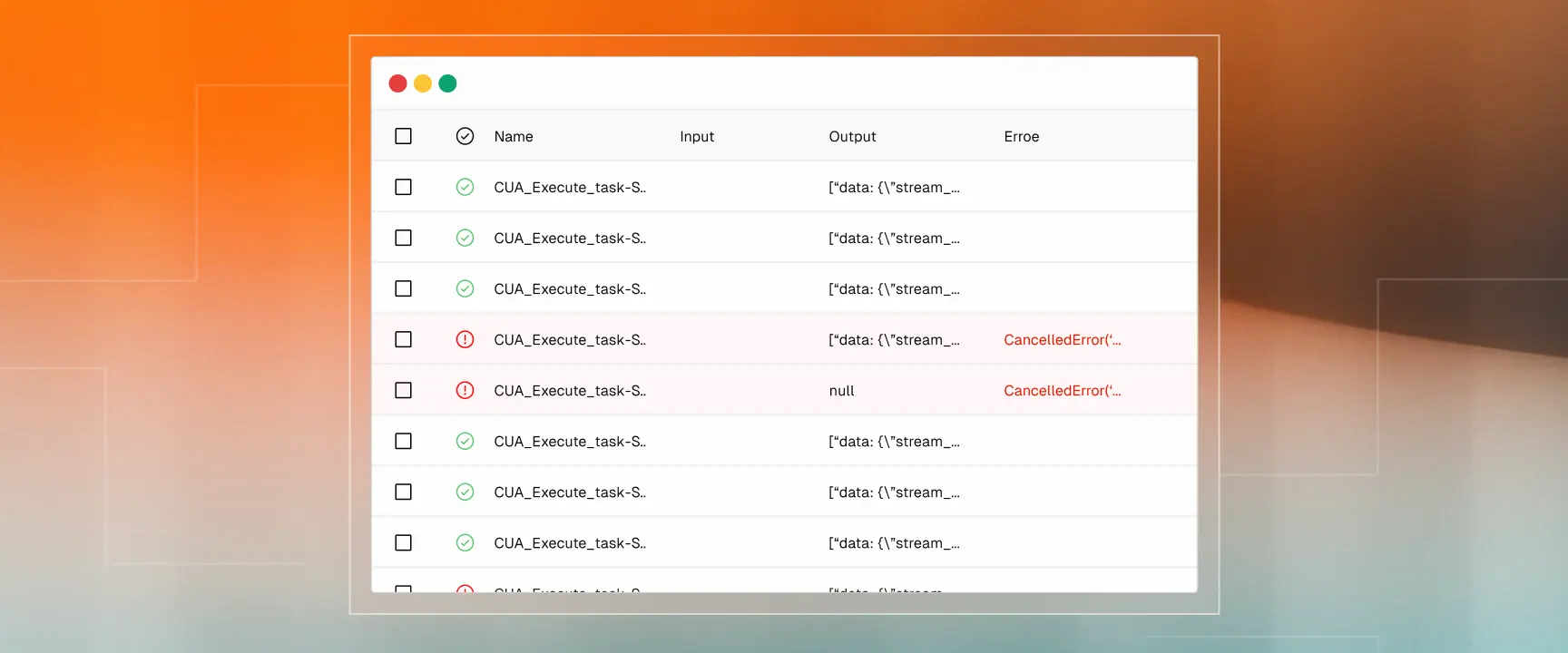

LLMs don’t just improve; they change. To manage that change, vendors need version pinning, rollbacks, and gated releases. Independent evaluations matter more than self-referential tests, especially when accuracy is critical.

- What does your change management process for AI models look like (for example, versioning, approvals, rollback procedures)?

- Can customers pin model versions and roll back updates?

- Do you publish model cards and dataset datasheets that cover limitations, intended use, and benchmarks?

- Do you track and document the data sources and training process for your AI models to ensure there are no hidden biases, poisoned datasets, or IP risks?

- How do you test and monitor for drift, regressions, and bias before and after deployment?
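Version pinning and rollback can be pictured with a toy registry. This is a sketch under stated assumptions, not any vendor's API: the `ModelRegistry` class, its method names, and the version strings are all hypothetical.

```python
class ModelRegistry:
    """Toy registry that tracks releases and per-customer pins (illustrative only)."""

    def __init__(self):
        self.releases = []  # version strings, oldest first
        self.pins = {}      # customer -> pinned version

    def release(self, version):
        self.releases.append(version)

    def pin(self, customer, version):
        if version not in self.releases:
            raise ValueError(f"unknown version: {version}")
        self.pins[customer] = version

    def resolve(self, customer):
        """Pinned customers keep their version; everyone else gets the latest release."""
        return self.pins.get(customer, self.releases[-1])

    def rollback(self):
        """Withdraw the latest release so unpinned customers return to the previous one."""
        if len(self.releases) < 2:
            raise RuntimeError("no earlier release to roll back to")
        withdrawn = self.releases.pop()
        # Pins pointing at the withdrawn version are dropped as well.
        self.pins = {c: v for c, v in self.pins.items() if v != withdrawn}
        return withdrawn


registry = ModelRegistry()
registry.release("2025-01")
registry.release("2025-02")
registry.pin("acme", "2025-01")
print(registry.resolve("acme"), registry.resolve("other"))  # 2025-01 2025-02
registry.rollback()
print(registry.resolve("other"))  # 2025-01
```

The point for a questionnaire is the behavior, not the code: a pinned customer should not see a new model until they choose to, and a bad release should be reversible for everyone else.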


Monitoring and Incident Response

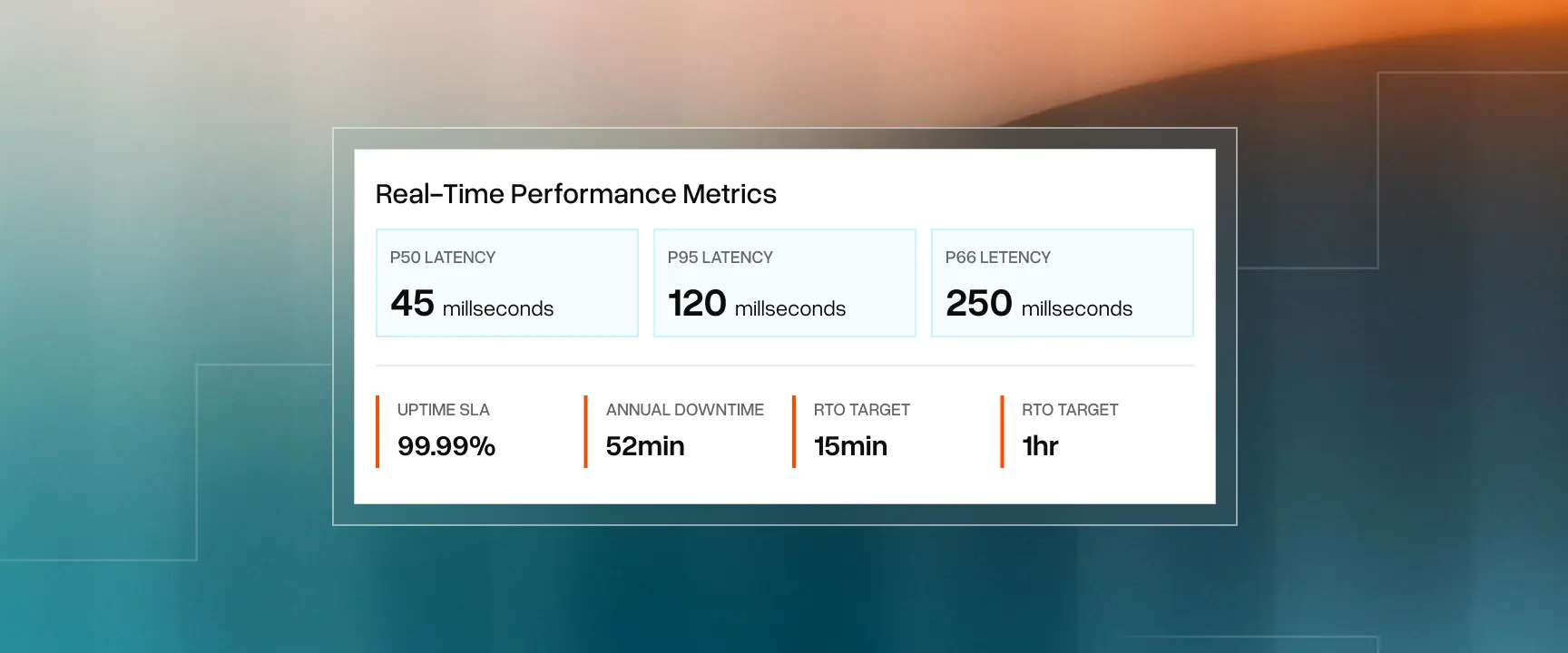

Hallucination rate, drift, abuse, and latency need real SLOs and on-call playbooks. PII-safe audit logs and regulator-ready comms (e.g., 72-hour breach windows) turn chaos into response, and response into learning.

- What metrics are monitored at runtime (latency, hallucination rate, drift, abuse)?

- Do you maintain PII-safe audit logs of prompts, outputs, and system actions?

- What is your AI-specific incident response plan (including regulatory timelines such as GDPR’s 72-hour breach window)?
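One way to read "PII-safe audit logs" is that prompts and outputs are redacted before they are written. The sketch below assumes a simple regex approach; the two patterns and the `redact` and `audit_record` helpers are hypothetical, and pattern matching of this kind will miss many forms of PII (names, addresses, free-text identifiers), so treat it as an illustration of the idea rather than a sufficient control.

```python
import re

# Simplified patterns for illustration; real PII detection needs far more coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def audit_record(prompt: str, output: str) -> dict:
    """Build a log entry that stores only redacted text."""
    return {"prompt": redact(prompt), "output": redact(output)}


print(audit_record("Contact jane@example.com or 555-123-4567", "Done."))
```

Redacting at write time, rather than at read time, means the raw values never reach the log store in the first place.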

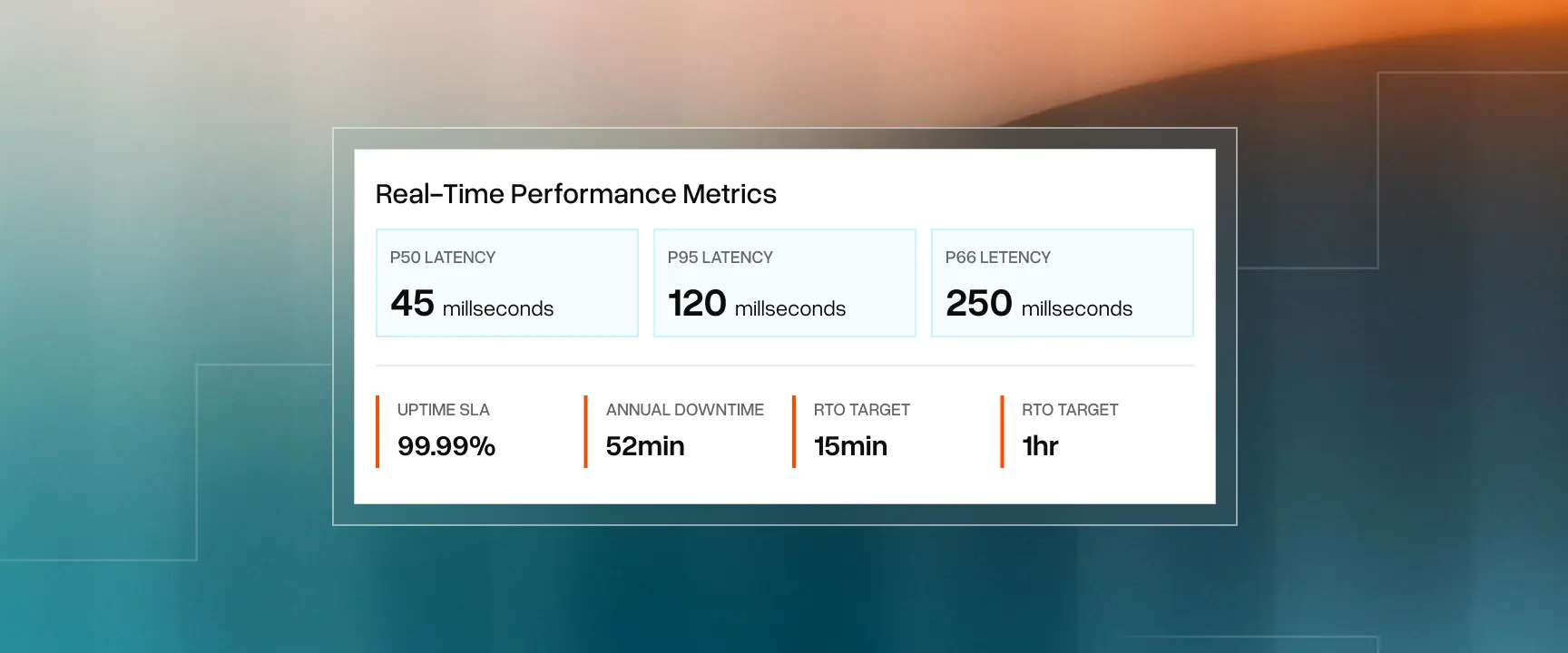

Reliability, Resilience, and Performance

Foundation models fail in unique ways such as outages, token limits, and latency spikes. Enterprises should ask how vendors address these failures. Multi-region failover, chaos testing, and fallback modes like HITL or cached responses can keep critical paths running when models break.

- What are your latency (P50/P95/P99) and availability guarantees, and how are they measured?

- Do you support multi-region or multi-provider failover?

- What fallback modes do you support when the model fails, such as cached responses, lower-precision inference, or human review?
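The fallback modes in the last question can be sketched as a simple chain: try the model, then a cached answer, then route to a human. Everything named here (`answer_with_fallback`, the cache contents, the review queue) is a hypothetical stand-in for whatever a vendor actually runs.

```python
def answer_with_fallback(query, call_model, cache, review_queue):
    """Try the model first, then a cached answer, then hand off to human review."""
    try:
        return {"source": "model", "answer": call_model(query)}
    except Exception:
        # Broad catch on purpose: any model failure (timeout, outage, token limit)
        # moves the request to the next fallback mode.
        pass
    if query in cache:
        return {"source": "cache", "answer": cache[query]}
    review_queue.append(query)
    return {"source": "human_review", "answer": None}


def failing_model(query):
    """Stand-in for a model call that fails, e.g. during a provider outage."""
    raise TimeoutError("model provider timed out")


cache = {"What is your refund policy?": "Refunds are available within 30 days."}
queue = []
print(answer_with_fallback("What is your refund policy?", failing_model, cache, queue)["source"])
print(answer_with_fallback("Can I change my plan?", failing_model, cache, queue)["source"])
```

With the failing stand-in, the first call is served from the cache and the second lands in the human review queue. Returning the `source` alongside the answer lets the caller show users when a response did not come from the live model.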

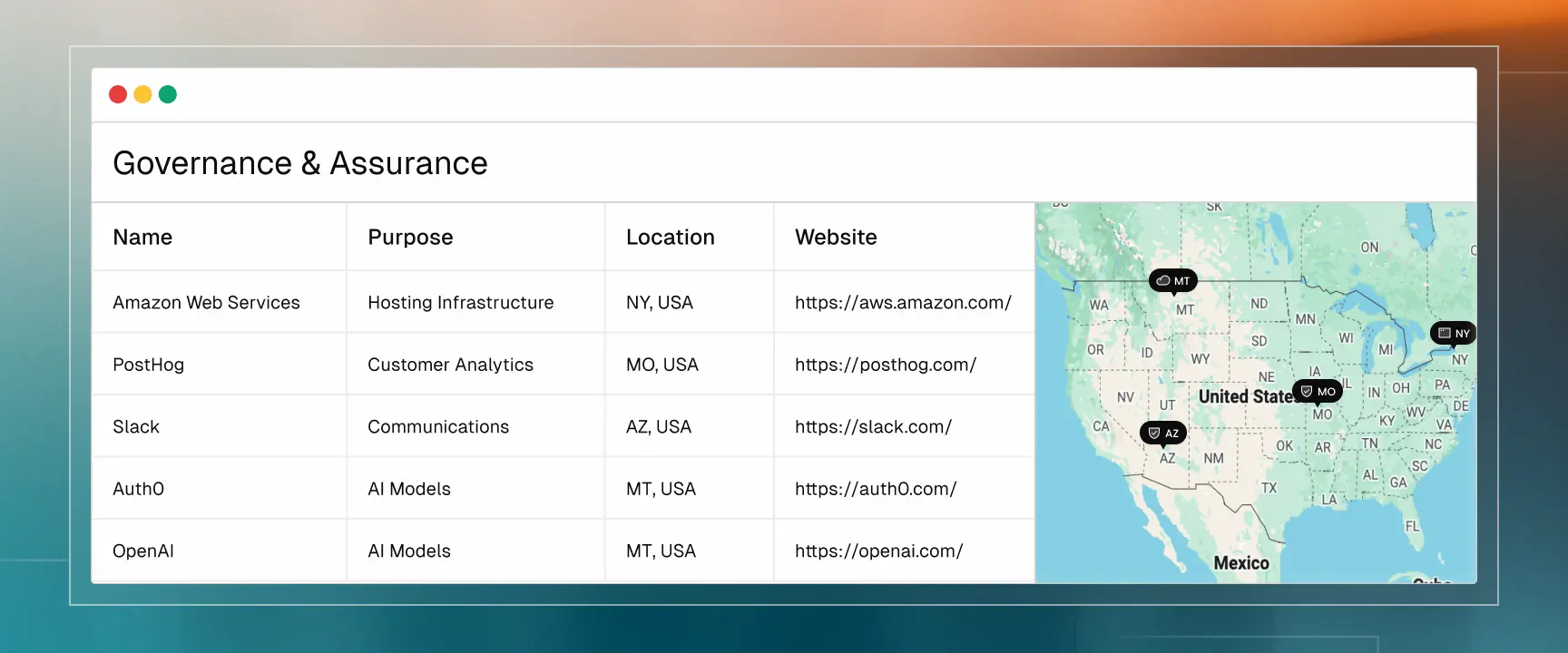

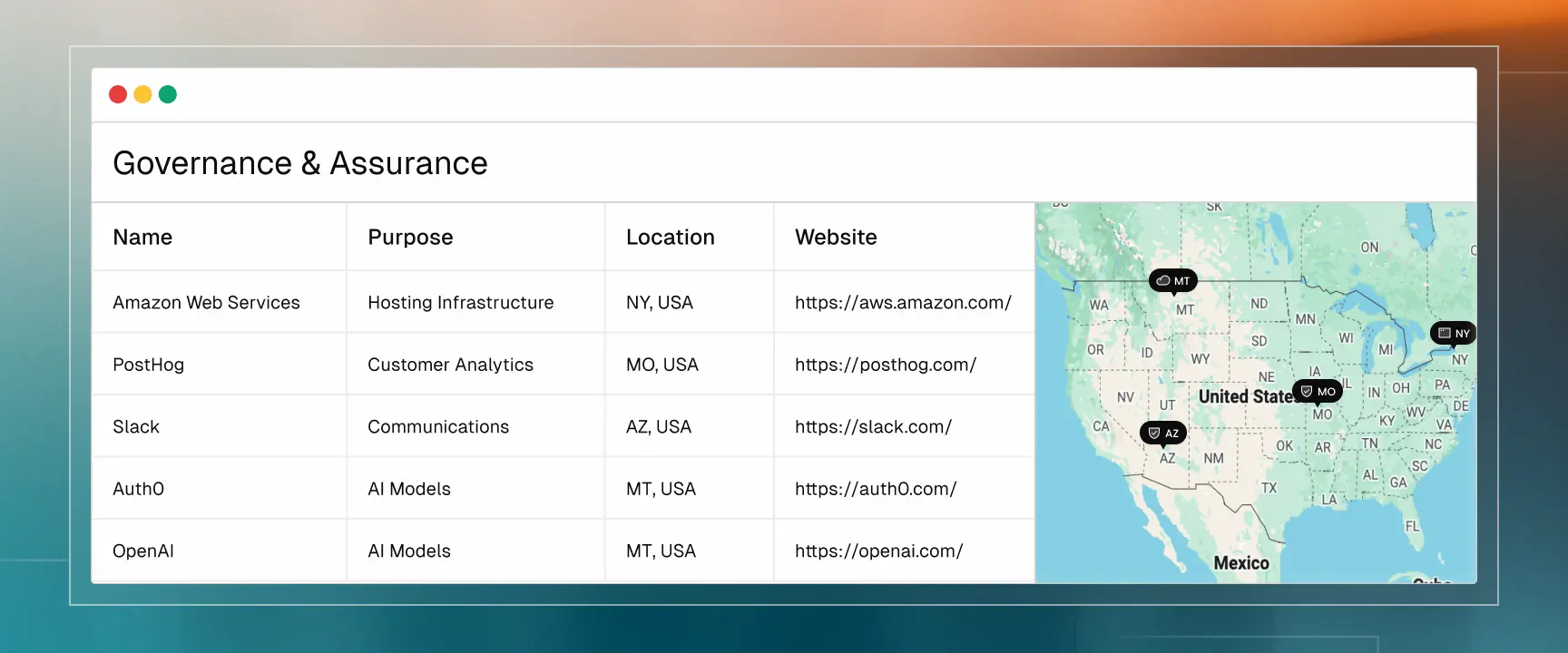

Compliance

SOC 2, ISO 27001, and other compliance frameworks show that baseline controls are in place. DPAs and lawful transfers make privacy defensible. AI-specific rules like the EU AI Act’s transparency requirements help avoid go-to-market roadblocks. Strong compliance is what lets security scale across customers and regions.

- Which security and privacy certifications do you currently hold (for example, SOC 2 or ISO 27001)?

- Do you execute DPAs and maintain a current sub-processor list?


Responsible AI

Prohibited uses and refusal behavior need to be enforced, not just promised. Measuring bias, tracking content provenance with tools like watermarking, and using human review when the stakes are high all help reduce real-world harm and reputational risk.

- What prohibited-use and refusal policies are enforced in your product?

- How do you measure and mitigate bias and fairness concerns?

- Are high-risk workflows human-reviewed or human-in-the-loop?


Intellectual Property

Vendors should not train on customer data by default. If fine-tuning is allowed, ownership of the new model weights needs to be clear. Vendors should also provide indemnities to cover IP risks. Without clarity on these points, disputes can become extremely costly after an incident.

- Do you contractually prohibit training on customer data by default?

- Do you implement provenance/watermarking (e.g., C2PA) for generated media?

- Who owns fine-tuned weights and derivative works?

- Do you provide indemnity against third-party copyright or data claims?


Conclusion

We’ve been through enough security questionnaires to know most of them weren’t written with AI in mind. They were built for SaaS: predictable software that shipped once and stayed largely the same. AI is different. Models change. Providers shift. Behavior drifts. Which means the way we evaluate them has to evolve too.

We see these questions as a recommended framework, not a one-size-fits-all template. Every organization has its own risk posture, regulatory obligations, and internal controls, so many of these questions should be customized to align with your existing security practices.

We’ve seen firsthand that the strongest questionnaires aren’t just compliance box-checks; they open up better conversations between vendors and enterprises. They surface gaps, clarify responsibilities, and ultimately build trust. If we, as buyers and builders, ask sharper questions, we all end up with AI systems that are not only more secure but also more reliable, transparent, and resilient.

About Delve

Delve is the AI-native compliance platform helping 500+ of the fastest-growing AI companies eliminate hundreds of hours of compliance busywork. From SOC 2 to HIPAA to ISO, we automate the heavy lifting so teams can focus on building. Just recently, we became compliant with ISO 42001, the international AI governance standard, and we are currently building deep AI automation to serve startup, midmarket, and enterprise teams. AI has changed how software is built. At Delve, we’re making sure compliance keeps up.

