Why MCP is the Future of AI Data Strategy

Summary

- Model Context Protocol (MCP) will define how AI agents access enterprise data. But without governance built into your data platform, sandbox controls limiting what agents can reach, and invisible compliance running automatically, MCP becomes a pipeline for data leakage, regulatory fines, and audit failures.

- MCP handles AI-to-data connections while Google's A2A protocol handles agent-to-agent collaboration; both will coexist, and both require governance at the data platform layer.

- Critical MCP vulnerabilities are documented in tools with 500,000+ downloads, and the EU AI Act imposes penalties of up to 7% of global annual turnover for its most serious violations.

- Treat AI data access like a gun range, not a library: data is checked out in controlled environments, sessions are time-bound, nothing leaves the sandbox, and every action is logged for data lineage.

- Invisible governance is the goal: users work without thinking about controls while audit trails answer the question regulators will ask: what did the AI agent do with that data?

- Complex approval workflows push employees toward shadow AI. Invisible governance fixes this: compliance runs automatically while users work without interruption.

- The data platform team should own AI governance, not security, because they control where data lives, how it moves, and where agents connect.

- Start with inventory: catalog every AI agent connection, identify ungoverned data sources, assign ownership to the data platform team, then implement sandboxes and continuous monitoring.

The MCP Data Strategy Gap: What Enterprises Are Missing

Why MCP Changes AI Data Access

Somewhere in your organization right now, an employee just connected their Google Drive to an AI assistant. No ticket. No approval. No audit trail.

Model Context Protocol (MCP) made it effortless. And that employee just created an ungoverned pipeline between your sensitive data and a third-party AI model.

MCP is the future of AI data strategy because it solves a fundamental problem: connecting AI agents to tools and databases without custom integrations for every system.

Anthropic designed MCP as the universal connector. Think of it as "USB-C for AI applications" that standardizes how large language models access external context.

Microsoft integrated MCP support across Copilot Studio and Azure AI Foundry. Pre-built servers exist for Google Drive, Slack, GitHub, Postgres, and dozens more. This ecosystem expands weekly.

The MCP Security Gap: Authentication Failures

But that future has a gap. A significant one.

MCP prioritizes connectivity over governance. The protocol lets AI agents discover and invoke tools dynamically at runtime. This makes it powerful. It also makes it dangerous.

Authentication in MCP is often optional. The MCP specification makes authorization optional and defines an OAuth 2.1-based flow for HTTP transports, but adoption remains limited.

Security researchers have found production MCP servers with direct database access, exposed to internal networks, requiring zero authentication.

Documented MCP Vulnerabilities: Real-World Exploits

These risks are not hypothetical. Security researchers have already found serious flaws in widely used MCP tools, documented in the CVE vulnerability database.

One vulnerability (CVE-2025-49596) affected MCP Inspector, Anthropic's official debugging tool with 38,000+ weekly downloads. The flaw let attackers run malicious code on a user's computer simply by tricking them into visiting a malicious website.

Another (CVE-2025-6514) affected mcp-remote, an authentication helper with 558,000+ downloads featured in guides from Cloudflare and Auth0. Attackers could hijack the tool to execute commands on the user's system.

MCP and the OWASP Top 10 for LLM Applications

The OWASP Top 10 for LLM Applications ranks prompt injection as the number one security risk.

In MCP environments, successful prompt injections do more than generate bad text. They trigger automated actions across every connected system. An attacker who manipulates an MCP-enabled agent can instruct it to steal data, modify records, or take actions the user never intended.

Every new MCP connection expands the attack surface.

AI Data Strategy Compliance: EU AI Act Exposure

The governance gap now carries regulatory exposure.

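The EU AI Act sets penalties of up to 7% of global annual turnover for the most serious violations (prohibited AI practices) and up to 3% for breaches of most other obligations, including those covering high-risk AI systems. Obligations for general-purpose AI (GPAI) models, including transparency requirements, began applying in August 2025.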

Regulators will ask: How did AI agents access sensitive data? What did they do with it? Where is the audit trail?

"We didn't know" is not an acceptable answer.

MCP is the future of AI data strategy. But only for organizations that close the gap between what the protocol enables and what their governance covers.

The Sandbox Model: Securing MCP Data Access

Why Traditional Data Governance Fails with MCP

Here is a mental model that changes how you think about AI governance: treat data access like a gun range, not a library.

The library model describes how most enterprises govern data today. Information sits in repositories. Users get credentials. They check out what they need, use it anywhere, and governance means logging who borrowed what.

The assumption is that, once authenticated, users handle data appropriately. Policies exist. Security awareness training happens annually. But the architecture assumes good faith.

The Gun Range Model for AI Data Strategy

The gun range model operates differently. It is specifically necessary for MCP because the protocol enables what traditional governance never anticipated: persistent, dynamic connections that users create without IT involvement.

Guns can be dangerous. That's why gun ranges have rules. The environment enforces accountability because trust alone isn't enough. You do not take firearms home. You inspect them, use them in a controlled environment under supervision, and return them. Nothing leaves the premises. Every round is accounted for.

AI agents accessing your data can be dangerous too. They operate at machine speed across every connected system. The sandbox model applies the same discipline of controlled access, time-bound sessions, and full audit trails. The architecture enforces governance because policy documents alone aren't enough.

The range operator knows exactly what happens in the range because the environment enforces accountability.


How MCP Breaks Legacy Data Access Models

MCP breaks the library model. It enables persistent, dynamic connections that traditional data governance architectures never anticipated.

Before MCP, integrations were static. IT built them. IT controlled them. IT monitored them. Connections were fixed pipelines with known endpoints.

MCP changes this. Users spin up connections on demand. AI agents discover and invoke tools at runtime. The "checkout" never ends. Data gets copied, not borrowed, often to places the organization cannot see.

Implementing Sandbox Architecture for MCP Governance

The gun range model provides the answer.

Data is accessed within sandboxed environments under complete organizational control. AI agents operate within defined boundaries with scoped permissions. Sessions have time limits with automatic revocation.

Every agent action is logged, monitored, and tied to a specific user, session, and purpose. When sessions end, access ends. Nothing persists.
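As a rough illustration of those properties, here is a minimal Python sketch of a time-bound, scoped session that logs every attempt. The `SandboxSession` class, its field names, and the resource names are hypothetical, chosen for this example only; a real sandbox would enforce these checks in the data platform rather than in application code.

```python
from dataclasses import dataclass, field


@dataclass
class SandboxSession:
    """Hypothetical time-bound, scoped session tied to one user, agent, and purpose."""
    user: str
    agent: str
    purpose: str
    scopes: frozenset       # resources this session may touch
    expires_at: float       # epoch seconds; access ends at this moment
    audit_log: list = field(default_factory=list)

    def access(self, resource: str, now: float) -> bool:
        """Allow the call only if the session is live and the resource is in scope."""
        if now >= self.expires_at:
            decision = "denied:expired"
        elif resource not in self.scopes:
            decision = "denied:out_of_scope"
        else:
            decision = "allowed"
        # Every attempt is recorded, including denials.
        self.audit_log.append({
            "time": now, "user": self.user, "agent": self.agent,
            "purpose": self.purpose, "resource": resource, "decision": decision,
        })
        return decision == "allowed"


session = SandboxSession(
    user="alice", agent="report-bot", purpose="quarterly-report",
    scopes=frozenset({"sales.orders"}),
    expires_at=1_000.0 + 900,  # a 15-minute window starting at t=1000
)
print(session.access("sales.orders", now=1_000.0))  # in scope, session live
print(session.access("hr.salaries", now=1_010.0))   # out of scope
print(session.access("sales.orders", now=2_000.0))  # after expiry
print(len(session.audit_log))                       # all three attempts are logged
```

Passing `now` explicitly keeps the sketch deterministic. In practice the clock, the scopes, and the revocation would come from the platform, not from the caller.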


MCP Audit Trails: Answering the Regulator's Question

This approach answers the audit question regulators will ask: what did the AI agent do with that data?

Without sandbox architecture, you reconstruct events from fragmented logs. With it, you have a chain of custody. You know what entered, what happened, and what left. The receipts exist because the architecture generates them.
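One common way to make such a chain of custody tamper-evident is a hash-chained log, where each entry's hash covers the previous entry. The sketch below is an assumption about how that could look, not a description of any particular product. The function names and event fields are invented for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log: list, event: dict) -> dict:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    entry = {
        "prev": prev_hash,
        "event": event,
        "hash": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
    }
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; an edited, reordered, or mid-chain-removed entry fails."""
    prev_hash = GENESIS
    for entry in log:
        payload = json.dumps({"prev": prev_hash, "event": entry["event"]}, sort_keys=True)
        expected = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"agent": "report-bot", "action": "read", "resource": "sales.orders"})
append_entry(log, {"agent": "report-bot", "action": "export", "resource": "summary.csv"})
print(verify_chain(log))  # chain is intact

log[0]["event"]["resource"] = "hr.salaries"  # simulate tampering with history
print(verify_chain(log))  # recomputed hash no longer matches
```

This sketch does not detect truncation of the most recent entries. A real design would also anchor the latest hash somewhere the agent cannot write.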

Technical Requirements for MCP Sandbox Implementation

Implementation requires policy-as-code approaches such as Rego (the Open Policy Agent policy language), YAML-based policies, or OSCAL, so that access is evaluated dynamically based on context rather than static role assignments.
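To make "context rather than static roles" concrete, here is a small Python sketch of context-based evaluation. It is written in Python for illustration only and is not Rego or OSCAL. The `AccessRequest` fields, the rules, and the approved purposes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    agent: str
    resource: str
    classification: str  # e.g. "public", "internal", "pii"
    purpose: str
    in_sandbox: bool


# Hypothetical policy: each rule is (description, predicate that must hold).
RULES = [
    ("PII may only be read inside a sandbox",
     lambda r: r.classification != "pii" or r.in_sandbox),
    ("PII requires an approved purpose",
     lambda r: r.classification != "pii" or r.purpose in {"support-case", "fraud-review"}),
    ("every request must state a purpose",
     lambda r: bool(r.purpose)),
]


def evaluate(request: AccessRequest) -> tuple:
    """Return (allowed, violated_rule_descriptions) for this request's context."""
    violated = [name for name, holds in RULES if not holds(request)]
    return (not violated, violated)


allowed, reasons = evaluate(
    AccessRequest("report-bot", "crm.customers", "pii", "marketing", in_sandbox=False)
)
print(allowed)   # the same agent is denied here because of context, not role
print(reasons)   # the violated rules double as an audit explanation
```

The decision depends on the data's classification, the stated purpose, and where the request runs, so the same agent can be allowed in one context and denied in another.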

Implementation also requires behavioral analytics integrated with your SIEM to detect anomalous agent behavior before data leaves the environment.
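A very simple form of this is comparing an agent's current activity against its own baseline. The sketch below flags a call rate that sits far above the historical mean. The three-standard-deviation threshold and the sample numbers are assumptions for illustration, and production systems typically use richer signals than a single rate.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list, observed: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` sample standard deviations above the mean.

    `baseline` needs at least two data points for a standard deviation to exist.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything above it is unusual.
        return observed > mu
    return (observed - mu) / sigma > threshold


# Hypothetical calls-per-minute history for one agent.
calls_per_minute = [42, 38, 45, 40, 41, 39, 44]

print(is_anomalous(calls_per_minute, 43))   # within normal variation
print(is_anomalous(calls_per_minute, 400))  # far above baseline; raise an alert
```

A check like this would run inside the sandbox boundary so that an alert can pause the session before any export, rather than after the fact.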

Finally, implementation requires data lineage tracking through every AI interaction, transformation, and output.
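Lineage can be pictured as a graph from each output back to the inputs it was derived from. The following Python sketch records those edges and walks them upstream. The artifact names and the `record_step` helper are hypothetical, used only to show the idea.

```python
from collections import defaultdict

# Maps each output artifact to the set of inputs it was derived from.
lineage = defaultdict(set)


def record_step(inputs, output):
    """Record that `output` was produced from `inputs` in one agent step."""
    lineage[output].update(inputs)


def upstream_sources(artifact) -> set:
    """Return every artifact that `artifact` was directly or indirectly derived from."""
    seen = set()
    stack = [artifact]
    while stack:
        current = stack.pop()
        for parent in lineage.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


# An agent reads two tables into its context, then writes a summary.
record_step(["crm.customers", "billing.invoices"], "agent.context#1")
record_step(["agent.context#1"], "summary.txt")

print(sorted(upstream_sources("summary.txt")))
```

With edges like these, the question of which sensitive sources fed a given output becomes a graph lookup instead of a log reconstruction.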

The tooling exists. The gap is between architectural commitment and organizational ownership.

The Accessibility Paradox: Why More Tools Hurt AI Data Governance

The Encyclopedia Britannica Problem in AI Governance

In the 1990s, Encyclopedia Britannica sat on shelves in nearly every library and many homes. Everyone had access to comprehensive knowledge.

Almost nobody used it regularly.

The barrier was not availability. It was friction. Finding information meant knowing which volume to pull, which entry to find, and how to navigate cross-references. Access was abundant. Usability was not.

The same paradox now strangles AI governance.

Tool Overload in Enterprise AI Data Strategy

Organizations have more governance tools than ever. Data catalogs track assets. Access management platforms control permissions. Compliance dashboards report adherence. Classification engines label sensitive content.

The governance stack has never been more comprehensive. Yet governance is harder, not easier. Why? Because the tools don't connect. Your data catalog doesn't talk to your access management platform. Your compliance dashboard doesn't see what your classification engine labeled. Your risks are not data-driven; they are locked in spreadsheets. When an auditor asks "trace this AI agent's data access," you're manually stitching together logs from five systems that were never designed to share context. More tools, worse traceability.

How Shadow AI Proliferates Despite MCP Governance Tools

Every new tool adds cognitive load.

Employees wanting to connect an AI agent to data now face approval workflows, classification requirements, access requests, and compliance acknowledgements. The process designed to ensure governance instead ensures avoidance.

Users work around complexity and friction because both reduce productivity. Shadow AI proliferates not because employees are malicious but because sanctioned AI is too cumbersome.

Governance tools meant to provide clarity instead push activity into shadow channels.

Invisible Governance: The Goal of MCP Data Strategy

This is the accessibility paradox: more governance tooling often produces worse outcomes.

The goal of MCP governance is not to add more tools. It is invisible governance. This is the pattern Delve has observed across hundreds of enterprise implementations: governance that adds friction fails. Governance that abstracts complexity (or creates clarity) succeeds.

Invisible governance means users experience simplicity while controls run automatically.

An employee connects an AI agent. Behind the scenes: policies evaluate the request, access scopes limit visibility, session parameters define duration, behavioral monitoring watches for anomalies, and audit logs capture actions.

Users rarely consider PII, PHI, or audit trails. They work. Governance operates invisibly because it is woven into the architecture, not layered on top.

AI-Native Platforms vs. Retrofitted MCP Governance

Invisible governance cannot be added later. It is built from the start.

AI-native platforms understand this. They expect agents to request data dynamically, at machine speed, across unpredictable paths. Legacy platforms do not. They were built for humans clicking through workflows, not agents making hundreds of calls per minute.

Retrofitting governance onto legacy architecture creates the friction that pushes users toward shadow AI. The Britannica lesson holds: access nobody uses is access wasted. Governance nobody follows is governance failed.

Building MCP Governance into Your Data Platform

Why AI Governance Belongs to the Data Platform Team

The biggest mistake with AI governance: treating it as a security initiative.

Security teams enforce policy. They monitor violations. They respond to incidents. But they cannot architect the foundation governance operates on.

The data platform team controls where data lives, how it moves, and who accesses it at the source. They understand lineage because they built the pipelines. They own the layer where AI agents connect.

MCP governance belongs with the team that owns the data platform, not the team monitoring the perimeter.

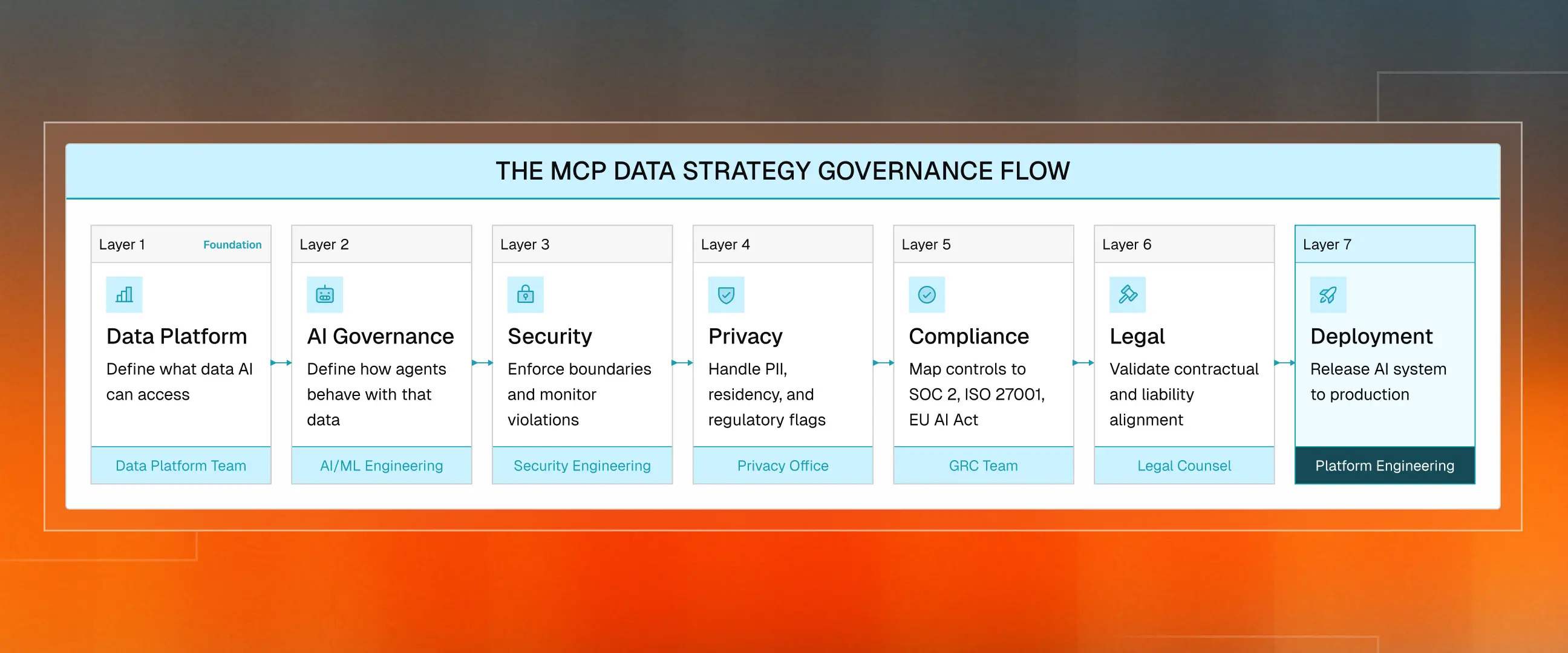

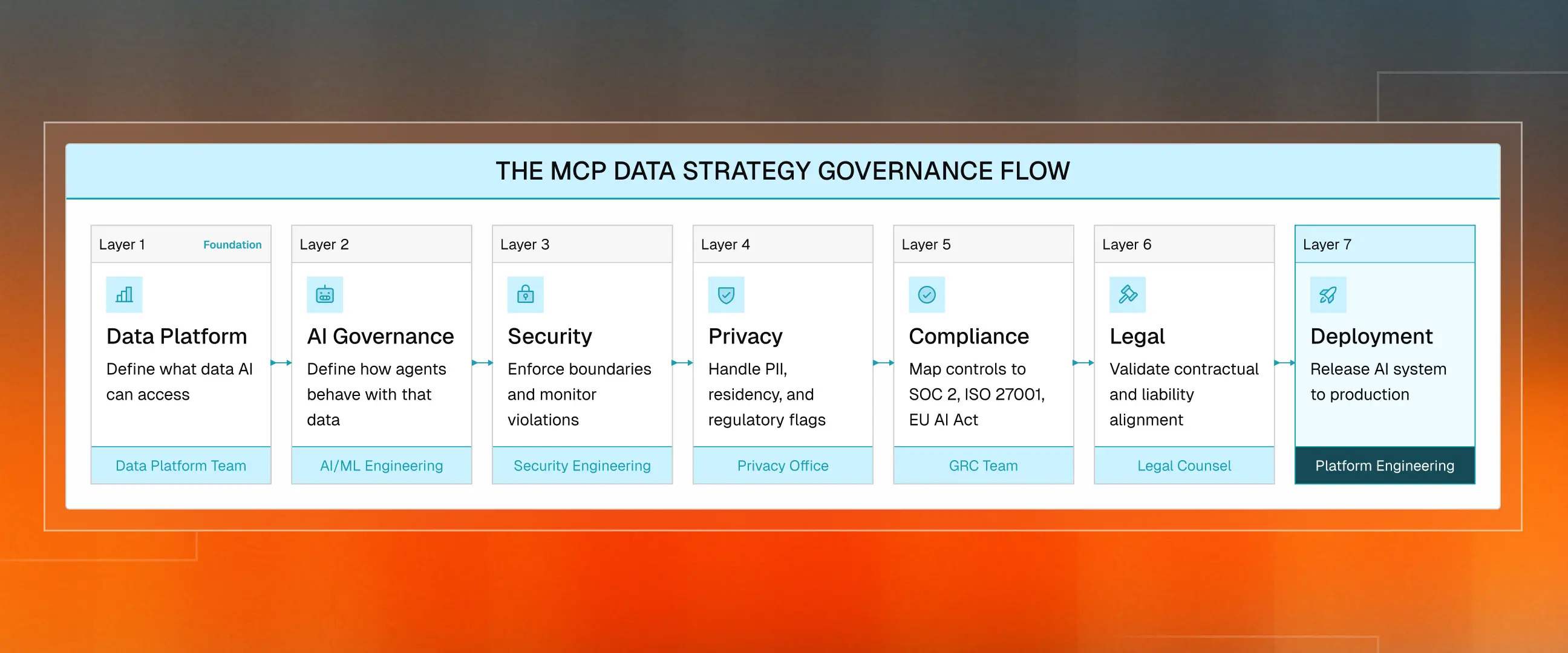

The MCP Data Strategy Governance Flow

The governance flow should be explicit:

- Data Platform establishes the foundation.

- AI Governance defines agent access rules.

- Security enforces and monitors.

- Privacy ensures regulatory data handling.

- Compliance maps controls to frameworks.

- Legal validates alignment.

- Deployment releases to production.

Each layer builds on the one beneath. Skip the foundation, and everything above destabilizes.

Reference Model for MCP Governance

The architecture requires unified governance at the data platform level, not a patchwork of tools bolted onto the edges. Delve embodies this approach: AI models inherit policies from the data they access. Lineage tracks how agents consume and act on data. Audit trails generate automatically, mapped to SOC 2, ISO 27001, and HIPAA controls.

This unification is not a feature. It is an architectural requirement for MCP-scale governance.

MCP and A2A: Protocol Convergence in AI Data Strategy

The protocol landscape is evolving towards a unified model.

MCP handles vertical integration: AI agents connecting to tools and databases. By contrast, Google's Agent2Agent (A2A) protocol handles horizontal integration, where multiple agents collaborate.

These protocols will coexist. An agent using MCP for database access may use A2A to coordinate with another agent. Both need governance. Both need lineage. Both create audit requirements. The data platform is where they converge.

Organizational Ownership for MCP Data Governance

This is not a tool decision. It is an organizational structure decision.

Someone owns the data platform today. That team must own the AI governance infrastructure. Reporting lines matter: they determine budget, decision rights, and accountability.

Clear ownership converts governance from abstract policy into operational capability with named individuals responsible for outcomes.

Visibility vs. Control in AI Data Strategy

Tools like Cyera show that governance visibility can extend across environments regardless of where data resides.

This matters for hybrid architectures spanning on-premises, cloud, and SaaS.

But visibility without control is monitoring, not governance. You see what happens without preventing what should not happen. The data platform provides the control plane. Edge tools report violations. Platform-embedded governance prevents them.

MCP Data Strategy Implementation: A 7-Step Framework

MCP connections likely exist in your environment already. Here is the sequence from exposure to governance:

- Inventory. Catalog every AI agent connection. Query identity provider logs for OAuth grants. Review browser extensions. Survey teams. You cannot govern what you have not discovered.

- Identify risk. Find data sources accessible via MCP without IT approval. Check OAuth token storage. Evaluate session expiration. Map data classification of reachable sources.

- Assign ownership. Make the data platform team the owner of AI governance infrastructure. Document it. Budget it. Governance without clear ownership means no ownership.

- Implement sandboxes. Create controlled environments where data is checked out under policy, not copied freely. Define time-bound access with automatic revocation. Build audit trails that answer: What did the agent do?

- Deploy continuous monitoring. Point-in-time audits cannot keep pace. Implement behavioral analytics for real-time anomaly detection. Integrate with your SIEM. Establish behavior baselines.

- Abstract complexity. Make governance invisible. If users must navigate approval workflows and compliance forms to use AI, they will find ungoverned alternatives. Success means users never think about controls.

- Align to frameworks. Map controls to NIST AI Risk Management Framework, EU AI Act, and ISO 42001. Document lineage for auditors.

The sequence matters. Inventory before sandboxes. Ownership before monitoring. Architecture before abstraction. Each step builds on the previous. Skip one, and the governance gap persists.
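As one way to start the inventory step, the sketch below groups exported OAuth grant events by client application and lists the ones that lack IT approval. The event fields (`user`, `client`, `scopes`, `approved_by_it`) are assumptions for illustration and do not reflect any identity provider's real log schema, so you would map your own export onto them.

```python
from collections import defaultdict

# Hypothetical export of OAuth grant events; field names are assumptions.
grant_events = [
    {"user": "alice", "client": "ai-assistant",
     "scopes": ["drive.readonly"], "approved_by_it": False},
    {"user": "bob", "client": "ai-assistant",
     "scopes": ["drive.readonly", "mail.read"], "approved_by_it": False},
    {"user": "carol", "client": "bi-dashboard",
     "scopes": ["warehouse.read"], "approved_by_it": True},
]


def build_inventory(events):
    """Group grants by client app, collecting users, scopes, and approval status."""
    inventory = defaultdict(lambda: {"users": set(), "scopes": set(), "approved": True})
    for event in events:
        entry = inventory[event["client"]]
        entry["users"].add(event["user"])
        entry["scopes"].update(event["scopes"])
        # A client counts as approved only if every one of its grants was approved.
        entry["approved"] = entry["approved"] and event["approved_by_it"]
    return dict(inventory)


def ungoverned(inventory):
    """Return client names with at least one unapproved grant, sorted."""
    return sorted(name for name, entry in inventory.items() if not entry["approved"])


inventory = build_inventory(grant_events)
print(ungoverned(inventory))
print(sorted(inventory["ai-assistant"]["scopes"]))
```

The output of a pass like this feeds the next step directly: each ungoverned client and its scopes become a row in the risk assessment.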

Ready to Close the Gap?

MCP is the future of AI data strategy. It is reshaping how AI connects to enterprise data. Delve builds governance directly into that connection. The question is whether your governance will be ready.

AI-native compliance your users never see and your auditors always can.
